Analyst A: Starting our discussion on the competitive landscape in AI inference technology, it’s clear that sparse network IP is a critical differentiator. NVIDIA has been a pioneer with their developments. How do you view the significance of sparse networks in this context?

Analyst B: Sparse network technology is a game-changer for AI inference. It enables more efficient processing by leveraging the inherent sparsity in neural networks, thus reducing computational load and improving energy efficiency. NVIDIA’s foray into this, especially with technologies like SNAP, underscores its importance.

Analyst A: Absolutely, NVIDIA’s commitment to sparse networks, demonstrated by their R&D investments and by patent filings around designs such as the Sparse Neural Acceleration Processor, gives them a notable edge. But let’s not overlook other players like Samsung, Apple, and Qualcomm. They’ve also been making strides in AI and inference technology.

Analyst B: Right, Samsung’s collaboration with Naver to develop a more power-efficient AI chip than NVIDIA’s solutions is a testament to the growing competition. Their focus on low-power DRAM integration for enhancing inference tasks on AI applications shows a clear industry shift towards optimizing for efficiency.

Analyst A: And then there’s Apple, known for integrating AI into their devices seamlessly. While they might not publicly disclose as much about their inference chips or sparse network IP, their advancements in areas like augmented reality (AR) and machine learning frameworks hint at significant internal developments.

Analyst B: Qualcomm’s Cloud AI 100 is another example. Designed specifically for AI inference, it claims significant performance improvements over traditional hardware. Their approach to developing AI inference chips, drawing from their neural processing units in Snapdragon SoCs, showcases a broad industry effort to cater to AI workloads efficiently.

Analyst A: It’s fascinating to see how each company is positioning itself within the AI inference space. NVIDIA’s early investments and continued focus on specialized hardware for AI inference, like sparse networks, certainly set them apart. However, the strategies of Samsung, Apple, and Qualcomm indicate a diverse and competitive market.

Analyst B: Indeed, the diversity in strategies highlights the multifaceted nature of the AI inference market. NVIDIA’s advantage lies in its specialized processing capabilities, but the efforts of Samsung, Apple, and Qualcomm show that there are multiple pathways to achieving efficiency and performance in AI inference.

Analyst A: The question remains: how will these technologies evolve, and how will market dynamics shift as more companies enter the fray or as existing players further refine their technologies?

Analyst B: Market dynamics will likely continue to evolve rapidly. As AI applications become more pervasive, the demand for efficient inference technology will only grow. Companies that can provide scalable, power-efficient, and high-performance solutions, whether through sparse network IP or other innovations, will lead the market.

Analyst A: It’s a race that will be fascinating to watch. NVIDIA may currently lead with its sparse network IP and dedicated hardware like SNAP, but the innovation from other tech giants and the broader focus on AI efficiency and performance means the competitive landscape is anything but static.

Analyst B: Absolutely, the future of AI inference technology is bright, with sparse network IP playing a pivotal role. As companies continue to innovate, the advancements in this space will not only drive competition but also enable new applications and capabilities that we can only begin to imagine.

Analyst A: Let’s delve deeper into what exactly sparse network technology entails, especially from a technological standpoint. We’ve referenced several academic papers in our discussions. Could you summarize the key technological insights from those?

Analyst B: Of course. Sparse network technology fundamentally revolves around the concept of sparsity in neural networks. Sparsity refers to the presence of a significant number of zero-valued elements within the data or model parameters. The paper “Exploring the Regularity of Sparse Structure in Convolutional Neural Networks” provides an excellent foundation, as its co-authors include a leading NVIDIA engineer who worked on the Ampere architecture. Also, “Sparsity in Deep Learning: Pruning and growth for efficient inference and training in neural networks” gives a nice overview of the technology.

Analyst A: That paper was quite enlightening. It demonstrated how sparsity could be leveraged to reduce computational complexity by skipping zero-valued elements during the network’s inference phase, correct?

Analyst B: Exactly. By exploiting the sparsity within neural networks, it’s possible to achieve significant reductions in memory usage and computational resources. This is because a multiplication by a zero value contributes nothing to the result, so those computations can be bypassed entirely.
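
To make the zero-skipping point concrete, here is a minimal Python sketch. The function names and the sample vectors are illustrative assumptions, not taken from either paper; the sketch only shows that skipping zero weights leaves the dot product unchanged while reducing the number of multiplications.

```python
def dense_dot(weights, activations):
    """Multiply every pair, including pairs where the weight is zero."""
    total, multiplies = 0.0, 0
    for w, a in zip(weights, activations):
        total += w * a
        multiplies += 1
    return total, multiplies


def zero_skipping_dot(weights, activations):
    """Skip pairs whose weight is zero; they cannot change the sum."""
    total, multiplies = 0.0, 0
    for w, a in zip(weights, activations):
        if w == 0:
            continue  # a zero weight contributes nothing to the result
        total += w * a
        multiplies += 1
    return total, multiplies


# Hypothetical example data: 5 of the 8 weights are zero.
weights = [0.0, 0.5, 0.0, 0.0, -1.0, 0.0, 0.0, 2.0]
activations = [3.0, 4.0, 1.0, 7.0, 2.0, 5.0, 6.0, 1.0]

dense_result = dense_dot(weights, activations)
sparse_result = zero_skipping_dot(weights, activations)
print(dense_result, sparse_result)
```

Both calls return the same sum, but the zero-skipping version performs 3 multiplications instead of 8. In software the branch itself has a cost, which is one reason dedicated hardware support matters.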

Analyst A: I found the discussion on different granularities of sparsity particularly interesting. The paper outlines how coarse-grained and fine-grained sparsity differ, impacting both the efficiency of hardware acceleration and the accuracy of the model.

Analyst B: Indeed, the granularity of sparsity is a crucial factor. Coarse-grained pruning, like removing entire filters or channels, offers more structured sparsity patterns that are easier for hardware accelerators to exploit. However, it can be more challenging to maintain model accuracy compared to fine-grained pruning, which targets individual weights.
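
The difference between the two granularities can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: rows of a small matrix stand in for filters, magnitude is used as the pruning criterion, and the function names are hypothetical.

```python
def prune_fine_grained(matrix, sparsity):
    """Zero the smallest-magnitude individual weights across the matrix."""
    positions = sorted(
        (abs(w), r, c) for r, row in enumerate(matrix) for c, w in enumerate(row)
    )
    n_prune = int(len(positions) * sparsity)
    pruned = [row[:] for row in matrix]
    for _, r, c in positions[:n_prune]:
        pruned[r][c] = 0.0
    return pruned


def prune_coarse_grained(matrix, sparsity):
    """Zero whole rows (stand-ins for filters) with the smallest L1 norm."""
    norms = sorted((sum(abs(w) for w in row), r) for r, row in enumerate(matrix))
    n_prune = int(len(matrix) * sparsity)
    pruned = [row[:] for row in matrix]
    for _, r in norms[:n_prune]:
        pruned[r] = [0.0] * len(matrix[r])
    return pruned


# Hypothetical 4x4 weight matrix.
weights = [
    [0.9, -0.1, 0.05, 0.7],
    [0.2, 0.1, -0.15, 0.1],
    [-0.8, 0.6, 0.02, -0.5],
    [0.3, -0.04, 0.4, 0.01],
]

fine = prune_fine_grained(weights, 0.5)
coarse = prune_coarse_grained(weights, 0.5)
for row in fine:
    print(row)
for row in coarse:
    print(row)
```

At the same 50% sparsity, the coarse-grained result removes two complete rows, including relatively large weights such as 0.4 and 0.3, while the fine-grained result keeps them and scatters the zeros irregularly. That is the trade-off in miniature: regular patterns are easier for hardware, irregular ones are gentler on accuracy.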

Analyst A: It seems like a delicate balance between maintaining the model’s predictive power and optimizing for computational efficiency. The paper also discussed methods to measure and achieve optimal sparsity levels without significant accuracy loss, right?

Analyst B: Precisely. The authors proposed various techniques for pruning and dynamically adjusting the sparsity levels during the training process. This ensures that the final model retains its effectiveness while benefiting from the efficiencies that sparsity offers.
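
One way to picture adjusting sparsity during training is a gradual schedule. The sketch below uses a cubic ramp of the kind proposed by Zhu and Gupta for gradual magnitude pruning; it is swapped in here purely as an illustration and is not necessarily the method of the papers discussed. The function name, step counts, and the 90% target are assumptions.

```python
def target_sparsity(step, start_step, end_step, initial=0.0, final=0.9):
    """Cubic ramp from `initial` to `final` sparsity between two training steps."""
    if step <= start_step:
        return initial
    if step >= end_step:
        return final
    progress = (step - start_step) / (end_step - start_step)
    # Prune quickly at first, then slow down as the target is approached.
    return final + (initial - final) * (1.0 - progress) ** 3


# Hypothetical schedule: ramp from 0% to 90% sparsity over 100 steps.
for step in (0, 25, 50, 75, 100):
    print(step, target_sparsity(step, start_step=0, end_step=100))
```

At each step, a training loop would prune to the scheduled level (for example with magnitude pruning) and keep training so the remaining weights can compensate.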

Analyst A: And what about the hardware aspect? How does sparse network technology influence the design of AI accelerators?

Analyst B: Sparse networks necessitate specialized hardware designs that can efficiently handle sparse data structures. This includes innovations in memory architecture to store sparse matrices and processing units capable of skipping zero-valued computations. NVIDIA’s SNAP, for example, is designed specifically to accelerate sparse neural network computations.
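
As a software analogy for the memory-architecture point, the sketch below stores a matrix in compressed sparse row (CSR) form and multiplies it by a vector while touching only the non-zero entries. CSR is a standard general-purpose format chosen here for illustration; it is an assumption, not a description of how any particular accelerator stores its data.

```python
def to_csr(matrix):
    """Convert a dense list-of-lists matrix to CSR arrays."""
    values, col_indices, row_ptr = [], [], [0]
    for row in matrix:
        for c, w in enumerate(row):
            if w != 0:
                values.append(w)
                col_indices.append(c)
        row_ptr.append(len(values))  # marks where the next row starts
    return values, col_indices, row_ptr


def csr_matvec(values, col_indices, row_ptr, x):
    """Multiply a CSR matrix by a dense vector, visiting non-zeros only."""
    y = []
    for r in range(len(row_ptr) - 1):
        acc = 0.0
        for k in range(row_ptr[r], row_ptr[r + 1]):
            acc += values[k] * x[col_indices[k]]
        y.append(acc)
    return y


# Hypothetical 3x4 matrix with 3 non-zeros out of 12 entries.
matrix = [
    [0.0, 2.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 3.0],
]
values, col_indices, row_ptr = to_csr(matrix)
y = csr_matvec(values, col_indices, row_ptr, [1.0, 2.0, 3.0, 4.0])
print(values, col_indices, row_ptr, y)
```

Only three values and their column indices are stored, and the multiply loop runs three times instead of twelve. The indirect lookup `x[col_indices[k]]` is the irregular memory access that specialized hardware tries to make cheap.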

Analyst A: It’s clear that sparse network technology not only promises significant performance and efficiency gains but also poses unique challenges in terms of model training and hardware design.

Analyst B: Absolutely. The ongoing research and development in this area are pivotal. As we push the boundaries of what’s possible with AI and machine learning, leveraging sparsity effectively could be key to unlocking new levels of performance and efficiency.

Analyst A: The advancements in sparse network technology will undoubtedly shape the future of AI inference, driving innovation across the board. It’s an exciting field with much potential still to be explored.

Analyst A: Considering our discussion on sparse network technology, let’s talk about the critical role of hardware integration, like NVIDIA’s SNAP, in leveraging this technology. How do you see hardware accelerators impacting the performance of sparse networks?

Analyst B: Hardware accelerators like SNAP are pivotal for unleashing the full potential of sparse network technology. The integration of hardware specifically designed to handle sparse data structures allows for direct, efficient processing of neural networks where sparsity is a key characteristic. This hardware-software synergy is crucial for optimizing computational resources and energy efficiency.

Analyst A: I agree. The ability of these accelerators to bypass zero-valued computations and efficiently handle sparse matrices directly impacts the speed and power consumption of AI inference tasks. It seems like a specialized hardware approach could significantly advance the deployment of AI technologies in real-world applications.

Analyst B: Absolutely, and if such technologies are patented, it could have profound implications for the entire industry. A patented hardware accelerator like SNAP not only solidifies a company’s competitive edge but also sets a benchmark for how sparse networks are utilized across devices embedding inference technology.

Analyst A: That’s a compelling point. NVIDIA has a pending patent application, No. 17/111875. Patents in this space could dictate the direction of future developments in AI hardware. How do you envision this influencing the broader ecosystem of devices and applications relying on AI inference?

Analyst B: If key technologies like SNAP are patented, it may lead to a scenario where access to the most efficient inference technologies is restricted, pushing companies to either license these technologies or invest heavily in alternative solutions. This could accelerate innovation in some areas but might also lead to fragmentation in how AI inference is approached across the industry.

Analyst A: It’s an interesting dynamic. On one hand, patents protect and reward innovation, encouraging companies to push the boundaries of what’s possible. On the other, they could potentially limit the widespread adoption of the most efficient technologies, especially in a field as critical as AI.

Analyst B: Indeed, the balance between fostering innovation and ensuring broad access to technology is delicate. The impact of patented hardware on the AI landscape will largely depend on how companies choose to leverage their intellectual property—whether they create walled gardens or foster ecosystems through partnerships and licensing.

Analyst A: Looking ahead, the integration of specialized hardware like SNAP into devices could significantly change the game for AI applications, from mobile phones to autonomous vehicles. The efficiency gains in inference tasks could enable more sophisticated AI functionalities to be deployed directly on devices, even those with limited power resources.

Analyst B: The future certainly looks promising. As hardware accelerators become more sophisticated and integrated into a wider array of devices, we’re likely to see a new wave of AI applications that are not only more powerful but also more efficient. The role of patented technologies like SNAP will be crucial in shaping this future, driving both the capabilities and the competitive landscape of AI inference technology.

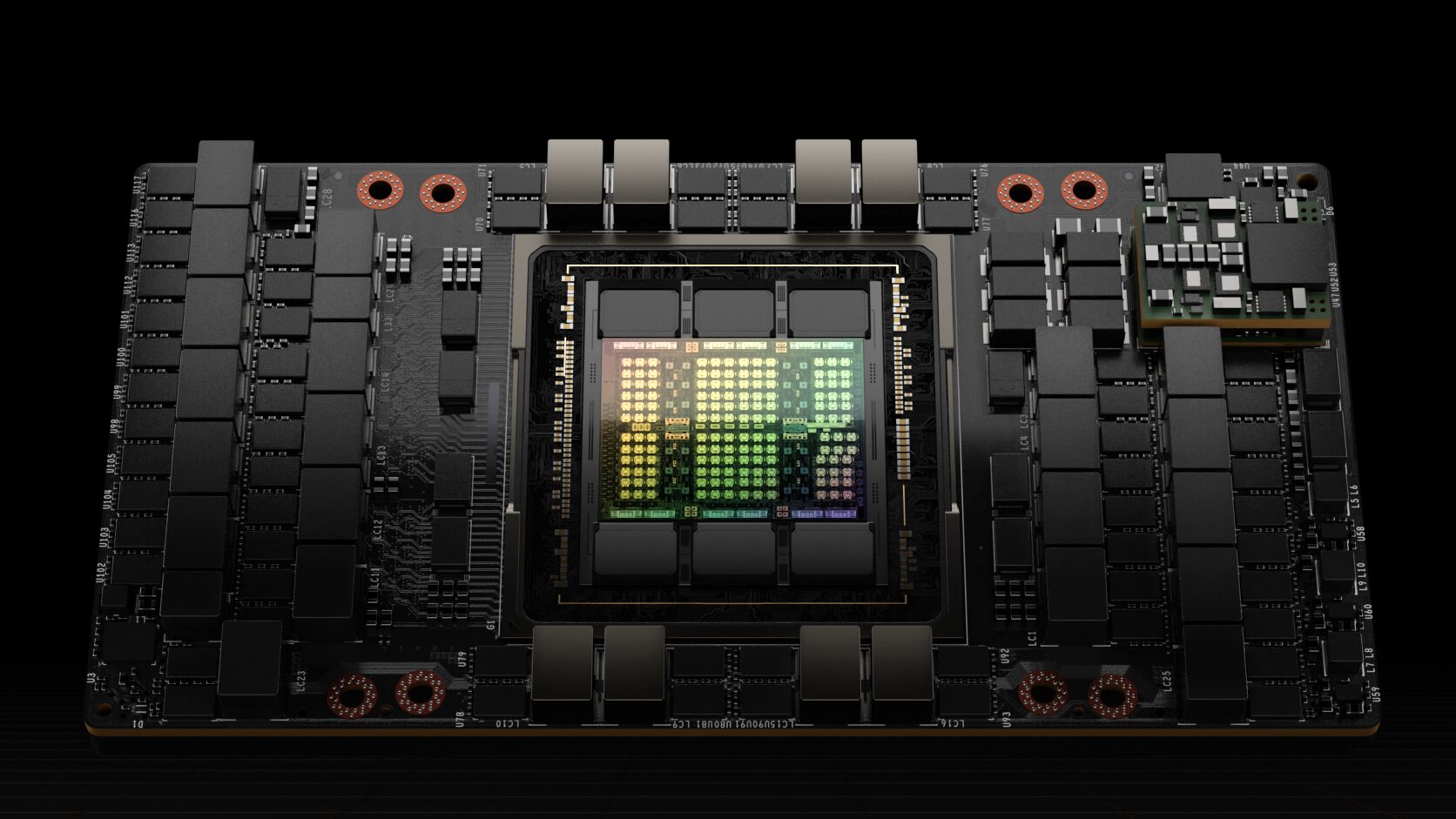

Analyst A: Let’s explore NVIDIA’s advancements in sparse network technology, focusing on their Hopper and Ampere architectures. The Hopper architecture introduces a new feature called the Tensor Memory Accelerator and expands the hierarchy of CUDA threads. This reduces the number of CUDA threads needed for managing memory bandwidth, allowing more threads to focus on general computations.

Analyst B: Yes, the Hopper architecture, especially with the H100 GPU, is fascinating. It features a new Transformer Engine and fourth-generation Tensor Cores with FP8 support, which are designed to speed up AI training and inference. According to NVIDIA, compared to the previous-generation A100 it can accelerate AI training by up to 9 times and AI inference by up to 30 times.

Analyst A: Moving to the Ampere architecture, it focuses on leveraging sparsity to accelerate AI inference and machine learning. Equipped with third-generation Tensor Cores, it uses fine-grained structured sparsity to provide up to twice the throughput for deep learning’s core matrix multiply-accumulate operations, without sacrificing accuracy.

Analyst B: Indeed, and it’s particularly efficient for running state-of-the-art natural language processing models like BERT, executing them 50% faster compared to using dense mathematics. This shows NVIDIA’s commitment to optimizing the use of sparsity in AI tasks.
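
The fine-grained sparsity that Ampere’s Tensor Cores exploit is described in NVIDIA’s public material as a 2:4 structured pattern: in every group of four weights, at most two are non-zero. The Python sketch below illustrates that pattern with simple magnitude-based selection; the function names are hypothetical and the storage layout is a simplification, not the hardware’s actual format.

```python
def compress_2_of_4(row):
    """Keep the 2 largest-magnitude weights in each group of 4.

    Returns the kept values and their positions (0-3) inside each group.
    """
    if len(row) % 4 != 0:
        raise ValueError("row length must be a multiple of 4")
    values, positions = [], []
    for start in range(0, len(row), 4):
        group = row[start:start + 4]
        by_magnitude = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)
        keep = sorted(by_magnitude[:2])
        values.extend(group[i] for i in keep)
        positions.extend(keep)
    return values, positions


def decompress_2_of_4(values, positions):
    """Rebuild the pruned dense row from the compressed form."""
    row = []
    for k in range(0, len(values), 2):
        group = [0.0] * 4
        group[positions[k]] = values[k]
        group[positions[k + 1]] = values[k + 1]
        row.extend(group)
    return row


# Hypothetical row of 8 weights (two groups of 4).
row = [0.1, -0.9, 0.4, 0.05, 0.7, 0.0, -0.2, 0.3]
values, positions = compress_2_of_4(row)
pruned = decompress_2_of_4(values, positions)
print(values, positions, pruned)
```

Half the values are stored, and each position fits in two bits. Because every group has the same shape, the hardware knows in advance that it can skip exactly half of the multiplications, which is where the "up to twice the throughput" figure comes from.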

Analyst A: Let’s also talk about cuSPARSELt and SNAP. cuSPARSELt is a library that accelerates sparse GEMM (general matrix multiplication) on the NVIDIA A100 GPU. It offers significant performance improvements for GEMM operations of varying layer sizes found in pruned models, achieving speedups of 1.3 to 1.6 times for some layers of the BERT-Large model.

Analyst B: And SNAP, the Sparse Neural Acceleration Processor, utilizes an architectural structure specialized for unstructured sparse deep neural networks. It maintains an average computational utilization of 75% by employing parallel associative search, making deep learning applications more efficient on resource and energy-constrained hardware platforms.
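
For unstructured sparsity, the core problem is finding which non-zero weights line up with non-zero activations. The Python sketch below is only a software analogy for that index-matching step, using a dictionary lookup where hardware would search in parallel; the names and data are hypothetical, and it does not describe SNAP’s actual circuit design.

```python
def match_nonzero_pairs(weight_entries, activation_entries):
    """Return (weight, activation) pairs that share a channel index.

    Each entry is an (index, value) tuple for a non-zero element.
    """
    activation_by_index = dict(activation_entries)
    return [
        (w, activation_by_index[idx])
        for idx, w in weight_entries
        if idx in activation_by_index
    ]


# Hypothetical compressed inputs: only non-zero elements are stored.
weight_entries = [(1, 0.5), (4, -1.0), (7, 2.0)]
activation_entries = [(0, 3.0), (1, 4.0), (7, 1.0)]

pairs = match_nonzero_pairs(weight_entries, activation_entries)
result = sum(w * a for w, a in pairs)
print(pairs, result)
```

Only indices 1 and 7 appear on both sides, so just two multiplications are needed. Keeping the multipliers busy depends on how quickly such matches can be found, which is the role the parallel associative search plays in the design described above.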

Analyst A: These technological innovations underscore NVIDIA’s efforts to enhance the efficiency and performance of sparse networks. Both the Hopper and Ampere architectures play a central role in this, focusing on enhancing the use of sparsity for AI inference and machine learning, which is pivotal for the next generation of computing technologies.

Analyst B: Absolutely. The development of advanced architectures like Hopper, along with specialized hardware like SNAP, illustrates NVIDIA’s leadership in AI and HPC fields. By leveraging sparsity, NVIDIA not only boosts computational efficiency and performance but also sets the direction for future research and development in this space.

Analyst A: The implications for devices that embed inference technology are profound. If technologies like SNAP are patented, they could significantly influence all devices relying on inference technology, marking a pivotal shift in how AI applications are deployed and executed across various platforms.

Analyst B: Indeed, the strategic integration of such hardware accelerators is critical for leveraging sparse network technology effectively. NVIDIA’s approach not only drives innovation within the company but also shapes the competitive landscape, pushing the entire industry towards more efficient and powerful AI solutions.